In part one we looked at the difference between running an application on a local machine compared to running it on a remote machine on the internet. The second major part of cloud computing, aside from running applications remotely, is virtualization.

Virtualization is the technology that separates the physical computer infrastructure from the resources actually presented to the user.

1. Desktop Virtualization

The most straightforward type of virtualization is running a virtual operating system on your home computer. This is easy to try for yourself: download a copy of Oracle’s VirtualBox and set up a new machine. You now have a virtual computer “inside” this piece of software, and you can choose how many processor cores, how much memory and how much hard disk space to allocate to it. You can also attach resources from the host computer, such as USB devices. Attach your CD drive with an operating system disc in it, and you can install a completely separate OS. In the picture below I’ve got Ubuntu Linux running as a virtual machine on my Windows 10 host, using Oracle’s VirtualBox, one of the most widely used programs for desktop virtualization:

The following illustration gives a diagrammatic representation of what this looks like:

This is handy for testing software or for running software that only works on certain systems. However, the real power of virtualization shows when it is applied to the following aspects of computing “in the cloud”, i.e. on machines connected to the internet.
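The VirtualBox setup described in this section can also be scripted with VirtualBox’s `VBoxManage` command-line tool. The Python sketch below only builds the argument lists so they can be inspected; `create_vm` runs them and assumes VirtualBox is installed with `VBoxManage` on the PATH. The VM name, sizes, disk filename and the `Ubuntu_64` OS type are example values, and the subcommands are the ones I understand recent VirtualBox releases to accept, so check `VBoxManage --help` for your version before relying on them.

```python
import os
import subprocess


def vboxmanage_commands(name, cpus, memory_mb, disk_mb, iso_path, ostype="Ubuntu_64"):
    """Build the VBoxManage calls that create and configure a VM.

    Each call is returned as an argument list so it can be inspected
    before anything is run.
    """
    disk = os.path.abspath(f"{name}.vdi")  # virtual hard disk file
    iso = os.path.abspath(iso_path)        # OS installer image (the "CD")
    return [
        # Register a new, empty VM with VirtualBox
        ["VBoxManage", "createvm", "--name", name, "--ostype", ostype, "--register"],
        # Allocate processor cores and memory (MB) from the host
        ["VBoxManage", "modifyvm", name, "--cpus", str(cpus), "--memory", str(memory_mb)],
        # Create a virtual hard disk; --size is in MB
        ["VBoxManage", "createmedium", "disk", "--filename", disk, "--size", str(disk_mb)],
        # Add a SATA controller, then attach the disk and the installer to it
        ["VBoxManage", "storagectl", name, "--name", "SATA", "--add", "sata"],
        ["VBoxManage", "storageattach", name, "--storagectl", "SATA",
         "--port", "0", "--device", "0", "--type", "hdd", "--medium", disk],
        ["VBoxManage", "storageattach", name, "--storagectl", "SATA",
         "--port", "1", "--device", "0", "--type", "dvddrive", "--medium", iso],
    ]


def create_vm(commands):
    """Run each command in order, stopping at the first failure."""
    for cmd in commands:
        subprocess.run(cmd, check=True)
```

For example, `create_vm(vboxmanage_commands("ubuntu-vm", 2, 4096, 20000, "ubuntu.iso"))` followed by `VBoxManage startvm ubuntu-vm` should boot the Ubuntu installer inside a VM with 2 cores, 4 GB of memory and a 20 GB disk.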

2. Server Virtualization (Network / Storage / Processing)

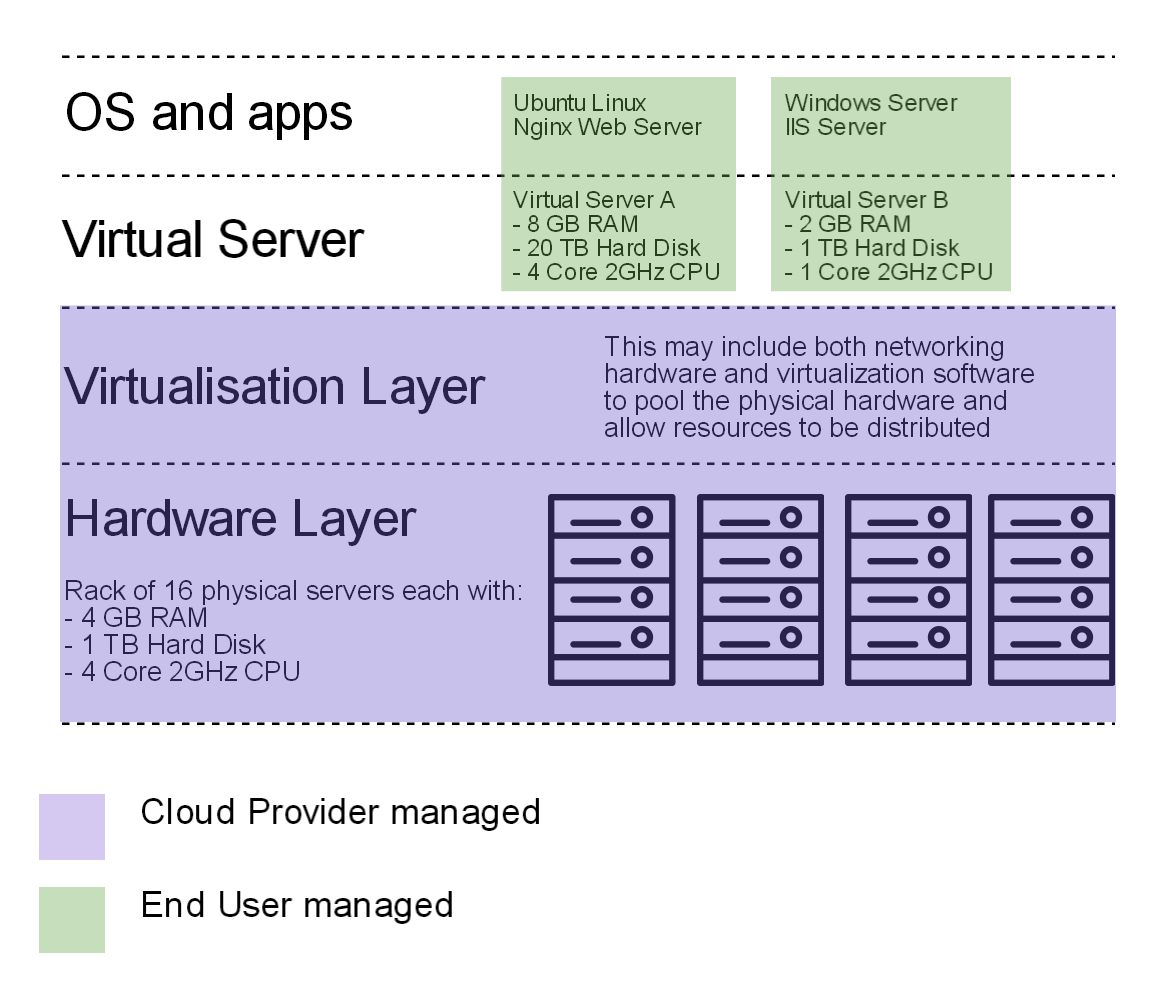

Whereas desktop virtualization allows a single physical computer to be split into multiple virtual systems, what I’m going to call “server” virtualization allows multiple physical components to be pooled together, and a specific set of virtual resources to be drawn from that pool. This means the virtual components are not limited by the maximum size of a single piece of hardware. For example, you can have a virtual server with a 16-core CPU for some very intensive video processing, while the underlying physical hardware is a rack of 4-core servers. This is illustrated in the following diagram:
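To make the pooling idea concrete, here is a toy model in Python: a rack of 4-core hosts from which a 16-core virtual server is carved out. The `Host` class and `allocate_cores` function are hypothetical illustrations of the bookkeeping only, not how any real hypervisor or cloud scheduler actually places work.

```python
from dataclasses import dataclass


@dataclass
class Host:
    """One physical server in the pool (hypothetical model)."""
    name: str
    cores: int
    used: int = 0

    @property
    def free(self) -> int:
        return self.cores - self.used


def allocate_cores(pool, wanted):
    """Reserve `wanted` cores across the pool, host by host.

    Returns a {host name: cores taken} map. Nothing is reserved
    unless the whole request fits, so a failed request leaves the pool unchanged.
    """
    if sum(h.free for h in pool) < wanted:
        raise RuntimeError(f"pool has fewer than {wanted} free cores")
    plan = {}
    remaining = wanted
    for host in pool:
        if remaining == 0:
            break
        take = min(host.free, remaining)
        if take:
            host.used += take
            plan[host.name] = take
            remaining -= take
    return plan
```

With `rack = [Host(f"node{i}", 4) for i in range(4)]`, calling `allocate_cores(rack, 16)` takes 4 cores from each of the four hosts, which is the “16-core server from 4-core parts” picture in miniature.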

This server hardware can be chosen and controlled in software. You choose the amount of memory, the number of CPU cores, the hard disk space and even the network bandwidth to suit your needs, and you get a virtual machine with exactly those specifications. You then select an operating system image to be loaded onto your new virtual server. Here’s an example of some choices of OS and virtual hardware from Digital Ocean, a virtual server platform that I run a couple of websites on:

Some would argue that Digital Ocean is not truly a “cloud” platform in the way IaaS (Infrastructure as a Service) platforms are, since those independently virtualize (and, one could say, commoditize) very specific individual computing services without needing a complete virtual computer. We’ll look at IaaS in part 3.
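Choosing a specification and OS image in Digital Ocean’s control panel is, under the hood, an API call. As a hedged sketch, the Python below builds (but does not send) a request to Digital Ocean’s public API endpoint for creating a Droplet, their name for a virtual server. The size, image and region slugs in the example (`s-2vcpu-4gb`, `ubuntu-22-04-x64`, `lon1`) are assumptions that change over time, so check Digital Ocean’s API reference for current values; the token is a placeholder.

```python
import json
import urllib.request

API_URL = "https://api.digitalocean.com/v2/droplets"


def droplet_request(token, name, size, image, region):
    """Build (but do not send) a request asking for a new virtual server."""
    body = {
        "name": name,      # hostname of the new server
        "region": region,  # data centre, e.g. "lon1" (assumed slug)
        "size": size,      # CPU/memory/disk bundle, e.g. "s-2vcpu-4gb" (assumed slug)
        "image": image,    # OS image to install, e.g. "ubuntu-22-04-x64" (assumed slug)
    }
    return urllib.request.Request(
        API_URL,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {token}",
            "Content-Type": "application/json",
        },
        method="POST",
    )
```

Passing the result to `urllib.request.urlopen(req)` would actually send it, and would create a real, billable server, so treat this as an illustration of “hardware chosen in software” rather than something to run casually.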

3. Scalability

One of the major advantages virtualization brings is scalability: since even hardware resources are effectively controlled by software, they can be changed automatically and at high speed. The classic example is a web server whose traffic varies widely. Let’s say I host AndyPi.co.uk on my home computer or a Raspberry Pi. That’s easily possible, since I’m only getting maybe 100 hits per day. But what happens if one of my articles goes viral and ranks top on Google, and I start getting thousands of hits every second? I only have a fixed amount of memory, processing power and network bandwidth, which will very quickly be used up, slowing my internet connection to a crawl and crashing the computer. By the time I’ve gone to the shop, bought and installed a faster computer and upgraded my network, everyone has either read my article or got bored of it, and my traffic has dropped back to a low level. Not only was I too slow to react to the peak in demand, but I’ve also wasted possibly thousands of pounds on hardware that I needed for maybe a day.

With virtualization, I can change my hardware configuration automatically, on the fly, as I monitor my usage. I can set my server to monitor CPU and memory usage and, when CPU stays above a threshold of, say, 80% for more than 10 minutes, add another CPU core. Likewise, if I’m about to run out of disk space or network bandwidth, I can set it to add more once usage passes a certain level. The same works in reverse, reducing the computing resources when usage drops below a certain level. This kind of service usually lets you rent by the minute, so even running a hugely powerful 16-core server for an hour costs a tiny fraction of the thousands of pounds it would cost to buy one! Read this article for some more examples.
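The threshold rule described above can be sketched as a small Python class. It assumes one CPU reading arrives per minute, so a window of 10 samples stands in for “more than 10 minutes”; the 20% scale-down threshold and the 1 to 16 core limits are made-up values, and how a core is actually added or removed is provider-specific and not shown.

```python
from collections import deque


class CoreScaler:
    """Add a core when CPU stays above `high` for a whole window of samples,
    remove one when it stays below `low`. Assumes one sample per minute."""

    def __init__(self, cores=1, high=80.0, low=20.0, window=10, min_cores=1, max_cores=16):
        self.cores = cores
        self.high, self.low = high, low
        self.min_cores, self.max_cores = min_cores, max_cores
        self.recent = deque(maxlen=window)  # only the last `window` readings are kept

    def observe(self, cpu_percent):
        """Record one CPU reading and return the (possibly new) core count."""
        self.recent.append(cpu_percent)
        if len(self.recent) < self.recent.maxlen:
            return self.cores  # not enough history yet
        if all(s > self.high for s in self.recent) and self.cores < self.max_cores:
            self.cores += 1
            self.recent.clear()  # wait a full window before acting again
        elif all(s < self.low for s in self.recent) and self.cores > self.min_cores:
            self.cores -= 1
            self.recent.clear()
        return self.cores
```

Clearing the history after each change is a deliberate choice: it stops the scaler adding several cores in a row before the previous change has had time to take effect.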