One of the best things about learning to code in Python is being able to save yourself time by automating repetitive tasks, or, as Al Sweigart puts it, to “Automate The Boring Stuff”.

When I was faced with cutting a video into more than 70 segments and creating a new web page for each of them, the thought of doing it all by hand in my video editing program and WordPress was a bit depressing. It would take a lot of time, and it would all be very boring!

I already had the start and end times of the video segments, along with a description of each segment, in an Excel sheet, so it was fairly easy to iterate over the rows and get the start and end time for each video. I used these to build an FFMPEG command to run directly, because I was already familiar with FFMPEG.

Here’s a simplified version of the code:

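```python
import subprocess

import pandas as pd

excelsheetpath = "segments.xlsx"  # placeholder: spreadsheet with the segment details
orig_filename = "original.mp4"    # placeholder: the full-length video to cut up

dataframe = pd.read_excel(excelsheetpath)

for index, row in dataframe.iterrows():
    starttime = row["Start time"]
    endtime = row["End time"]
    new_filename = str(row["Description"]) + ".mp4"

    # -vcodec copy cuts the video without re-encoding it; audio is re-encoded to AAC.
    # Passing a list instead of one shell string means filenames containing
    # spaces or apostrophes don't need any quoting.
    vid_cmd = [
        "ffmpeg", "-i", orig_filename,
        "-ss", str(starttime), "-to", str(endtime), "-copyts",
        "-acodec", "aac", "-vcodec", "copy",
        new_filename,
    ]
    subprocess.run(vid_cmd, check=True)
```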
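In the script above, the output filename comes straight from the Description column, so a description containing a slash or colon would produce an invalid path. A minimal sketch of a hypothetical `safe_filename` helper that could stand in for `str(row["Description"]) + ".mp4"` is shown here; the exact set of characters it keeps is an assumption, not something the original script did.

```python
import re


def safe_filename(description, extension=".mp4"):
    """Hypothetical helper: turn free-text into a filename safe on most systems."""
    # Keep letters, digits, whitespace, dots, hyphens and underscores; replace the rest
    cleaned = re.sub(r"[^\w\s.-]", "_", str(description))
    # Collapse runs of whitespace into a single space and trim the ends
    cleaned = re.sub(r"\s+", " ", cleaned).strip()
    # Fall back to a fixed name if nothing usable is left
    return (cleaned or "untitled") + extension


print(safe_filename("Part 1: Greetings / Hello"))  # → Part 1_ Greetings _ Hello.mp4
```

Because `\w` is Unicode-aware in Python 3, accented letters in a description are kept as they are.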

Things that worked well:

– I used the copy option for the video codec (-vcodec copy), so FFMPEG doesn’t re-encode the video but just cuts out the time segments I want. This makes the whole operation really fast.

Possible improvements:

– Although FFMPEG is fast, the way I set up the seek options (-ss) occasionally caused a segment to miss its first or last second. This is because, when the video is copied rather than re-encoded, FFMPEG can only cut at the nearest keyframe in the original video, which is not necessarily the same as the requested timestamp (see here).

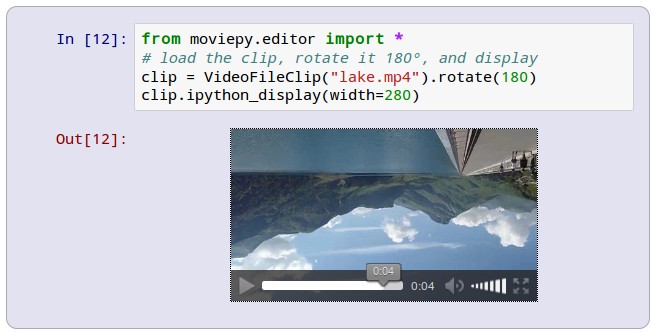

– I would have been better off starting with the Python package MoviePy, which is built on FFMPEG and can do all sorts of other interesting things as well. For example, I could easily have added a watermark logo to each video.
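One way around the keyframe problem described above, while staying with plain FFMPEG, is to re-encode the video when accuracy matters, since decoding every frame lets FFMPEG start the output at the requested time. The sketch below builds the argument list for either mode; the function name `build_cut_command` is hypothetical, and the accurate mode assumes your FFMPEG build includes the `libx264` encoder. It is noticeably slower than the copy mode.

```python
from typing import List


def build_cut_command(source: str, start: str, end: str, output: str,
                      accurate: bool = False) -> List[str]:
    """Hypothetical helper: build an ffmpeg argument list to cut [start, end]."""
    # Same seek options as the command in the script above
    cmd = ["ffmpeg", "-i", source, "-ss", start, "-to", end, "-copyts"]
    if accurate:
        # Re-encode the video (assumes libx264 is available) so the cut is frame-accurate
        cmd += ["-c:v", "libx264", "-c:a", "aac"]
    else:
        # Stream copy: fast, but the video can only cleanly start on a keyframe
        cmd += ["-c:v", "copy", "-c:a", "aac"]
    cmd.append(output)
    return cmd
```

The resulting list can be passed straight to `subprocess.run(cmd, check=True)`, so you could use the fast mode for a quick draft and switch `accurate=True` on for the final export.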

As for the web pages for each of the videos, I used a very similar method. WordPress is great for lots of simple content websites, but making 70-odd individual posts that are almost identical is really time-consuming. I’m sure there is a WordPress plugin that does something similar, but as I was already working in Python it was easy to carry on. I could have used the Python templating engine Jinja2, but it seemed like overkill, so I wrote the template page in HTML by hand, added some placeholder variables (in CAPITALS in the simplified code below), and then did a simple string replacement for each row of my spreadsheet, which held the details of each video.

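```python
import os

import pandas as pd

pagecsv = "videos.csv"       # placeholder: one row per video
template = "template.html"   # placeholder: hand-written page with CAPITALS placeholders
exportfolder = "pages"       # placeholder: where the generated pages are written

all_videos = pd.read_csv(pagecsv, encoding="utf-8")


def convert_id_to_filename(videoid):
    # e.g. 7 -> "07.html"
    return str(videoid).zfill(2) + ".html"


# Read the template once rather than re-opening it for every row
with open(template, "r", encoding="utf-8") as file:
    template_html = file.read()

os.makedirs(exportfolder, exist_ok=True)

for index, video in all_videos.iterrows():
    # Swap each CAPITALS placeholder for this video's values
    working_html = template_html
    working_html = working_html.replace("NUMBER-OF-VID", str(video["id"]).zfill(2))
    working_html = working_html.replace("VIDEO-ID-NUMBER", str(video["url"]))
    working_html = working_html.replace("ENGLISH-TITLE", str(video["english"]))
    working_html = working_html.replace("PDF-LINK", str(video["chapter"]).zfill(2))
    working_html = working_html.replace("APP-DEEP-LINK", str(video["appdeeplink"]))

    file_name = os.path.join(exportfolder, convert_id_to_filename(video["id"]))
    with open(file_name, "w", encoding="utf-8") as newfile:
        newfile.write(working_html)
```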
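A middle ground between the `str.replace` approach above and a full engine like Jinja2 is `string.Template` from Python’s standard library. This is a different technique from the one used here, so treat it as a suggestion: placeholders are written as `$name`, and `substitute()` raises a `KeyError` if a value is missing, whereas a mistyped placeholder with `str.replace` is silently left in the page. The template text and field names below are hypothetical.

```python
from string import Template

# Hypothetical page template using $-style placeholders instead of CAPITALS
page_template = Template(
    "<h1>$english_title</h1>\n"
    '<a href="pdf/$pdf_link.pdf">PDF</a>\n'
)

# One row's worth of values, as they might come from the CSV
row = {"english_title": "Greetings", "pdf_link": "03"}

# substitute() fills every placeholder, or raises KeyError if one has no value
html = page_template.substitute(row)
print(html)
```

In the loop above, the dictionary could be built from each `video` row, for example `{"english_title": video["english"], ...}`.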

Neither of these is a very advanced use of Python, but turning the work into a script saved a ton of time, and those savings compound. In this case, the original video I used initially was a low-resolution copy, and switching it for the high-resolution one took no additional effort. I also kept changing the web page template: first I added Open Graph meta tags to give a nice preview when the link is shared on social media, then back and next links, then some analytics tracking code. Each time, all I needed to do was make the change in the template, re-run the script to regenerate all 70-odd pages, and upload them. Easy!
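The back and next links mentioned above fit the same pattern: work out each page’s neighbours up front, then fill them into the template like any other placeholder. This is a minimal sketch, assuming the video ids are already in display order and pages are named the way `convert_id_to_filename` names them in the script above; `neighbour_links` is a hypothetical helper, not part of the original script.

```python
from typing import Dict, List, Optional


def neighbour_links(video_ids: List[int]) -> Dict[int, Dict[str, Optional[str]]]:
    """Hypothetical helper: map each video id to its back/next page filenames."""
    def to_filename(videoid: int) -> str:
        # Same naming scheme as convert_id_to_filename, e.g. 7 -> "07.html"
        return str(videoid).zfill(2) + ".html"

    links: Dict[int, Dict[str, Optional[str]]] = {}
    for i, videoid in enumerate(video_ids):
        # The first page has no "back" link and the last page has no "next" link
        back_page = to_filename(video_ids[i - 1]) if i > 0 else None
        next_page = to_filename(video_ids[i + 1]) if i + 1 < len(video_ids) else None
        links[videoid] = {"back": back_page, "next": next_page}
    return links


print(neighbour_links([1, 2, 3])[2])  # → {'back': '01.html', 'next': '03.html'}
```

Each entry could then be swapped into two extra placeholders in the template (hypothetically BACK-LINK and NEXT-LINK), with the link hidden when the value is `None`.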
