GitHub Actions is a CI/CD (Continuous Integration / Continuous Deployment) service built into GitHub. It makes deploying code as easy as pushing to a GitHub repo. I’ve previously used Zappa, which also offers a fast way to deploy (and could be used within GitHub Actions), but for this task I didn’t need Flask or any web access.

The task was to update an existing AWS Lambda function that runs on an event schedule (cron) and to remove all the manual deployment steps. I wanted to achieve:

- Zero effort deployment – no additional commands to update the code, just a git push – therefore use GitHub Actions

- All the deployment information as code in the GitHub repo, with no setup through the AWS web interface – therefore use SAM (Serverless Application Model) from AWS to create a stack

- Separate out the 6 or 7 different tasks within that function whilst still having only a single lambda function, by calling it with a parameter that selects which task to run – therefore I needed a way to set an input parameter from the cron event, the trickiest bit!

Here is how I achieved it, with some description of the important aspects.

1. Folder Structure

Here is a simplified folder structure, which you need to create locally and then push to GitHub.

    |____ README.md
    |____ template.yaml
    |____ lambda_code
    | |____ __init__.py
    | |____ main_app.py
    | |____ requirements.txt
    |____ .github
    | |____ workflows
    | | |____ main.yml
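
If you would rather script the layout than create it by hand, here is a minimal sketch using only the standard library. The names SKELETON and create_skeleton are my own for illustration and are not part of any tool; the function only creates empty placeholder files and leaves existing files alone.

```python
from pathlib import Path

# Files from the folder structure above, relative to the repo root.
SKELETON = [
    "README.md",
    "template.yaml",
    "lambda_code/__init__.py",
    "lambda_code/main_app.py",
    "lambda_code/requirements.txt",
    ".github/workflows/main.yml",
]


def create_skeleton(root):
    """Create empty placeholder files for the layout; existing files are left untouched."""
    created = []
    for relative in SKELETON:
        path = Path(root) / relative
        # Make intermediate folders such as .github/workflows as needed.
        path.parent.mkdir(parents=True, exist_ok=True)
        path.touch(exist_ok=True)
        created.append(path)
    return created
```

Calling create_skeleton(".") from an empty repo folder should give you the six files, ready to fill in during the following steps.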

2. AWS Lambda Python Code

The actual code running on AWS Lambda is in /lambda_code/main_app.py. It contains a number of functions that are called based on the event parameter, which we expect to be provided as a dictionary such as {'function_name': 'function_one'}. The name is looked up in the CLOUD_FUNCTIONS dictionary and the appropriate function is run.

    # import various_packages  (placeholder: import whatever your functions need)

    def function_one():
        print('Running function one')

    def function_two():
        print('Running function two')

    def lambda_handler(event, context):
        # Map the name passed in by the schedule to the function to run
        CLOUD_FUNCTIONS = {
            'function_one': function_one,
            'function_two': function_two,
        }
        try:
            CLOUD_FUNCTIONS[event['function_name']]()
        except Exception as e:
            # Errors are printed so they show up in the logs
            print(f'Error at {e}')
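
One thing the handler above does not distinguish is a typo in the function name from a genuine failure inside a task. Below is a rough sketch of a variant that reports an unknown name explicitly. The return values and the "ok"/"error" keys are my own assumptions for illustration (the original functions just print), and the sketch can be run locally without AWS.

```python
def function_one():
    return "ran function one"


def function_two():
    return "ran function two"


# Built once at import time rather than on every invocation.
CLOUD_FUNCTIONS = {
    "function_one": function_one,
    "function_two": function_two,
}


def lambda_handler(event, context):
    """Run the task named in event['function_name'] and report what happened."""
    name = event.get("function_name")
    task = CLOUD_FUNCTIONS.get(name)
    if task is None:
        # A misspelt name in the schedule would otherwise surface as a bare KeyError.
        return {"ok": False, "error": f"unknown function_name: {name!r}"}
    return {"ok": True, "result": task()}
```

Locally you can try it with lambda_handler({"function_name": "function_one"}, None), since the context argument is not used here.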

3. Dependency Packaging

The code has various packages as dependencies, so I created a Python virtual environment, installed the packages, and then exported the list to requirements.txt so the dependencies can be packaged and deployed to AWS. It’s important that requirements.txt is inside the lambda_code folder, as otherwise the dependencies will not be picked up. Nothing in the AWS SAM configuration yaml (next step) specifies this file; you just have to use the name requirements.txt. You don’t have to create the zip file or a ‘lambda layer’ yourself. SAM does all this:

    python3 -m venv env                           # create python virtual environment in env folder
    source env/bin/activate                       # activate virtual environment
    pip3 install various_packages                 # install packages as required
    pip3 list --format=freeze > requirements.txt  # export list of packages

4. SAM stack creation template

The next step is to define the AWS stack in template.yaml. It consists of one lambda function and two event schedules, each of which calls one of the two functions. This was the trickiest part and needed a bit of research, especially finding out how to pass a parameter from the cron schedule to the event variable in the lambda handler. It needs to be passed as a JSON string using the ‘Input’ property. We also need to specify the correct CodeUri (the folder in the repo that has the code) and the schedule (here every 5 minutes, just for testing):

    Transform: 'AWS::Serverless-2016-10-31'
    Parameters:
      APIUSERNAME:
        Description: "Parameter passed via SAM cli"
        Type: "String"
      APIPASSWORD:
        Description: "Parameter passed via SAM cli"
        Type: "String"
    Description: SAM template for cloud functions to update lambda and invoke by Cloudwatch
    Resources:
      Function:
        Type: 'AWS::Serverless::Function'
        Properties:
          CodeUri: lambda_code/
          FunctionName: cloud_function
          Handler: main_app.lambda_handler
          Runtime: python3.9
          Timeout: 30
          Events:
            FunctionOneSchedule:
              Type: Schedule
              Properties:
                Enabled: True
                Schedule: cron(0/5 * * * ? *)
                Input: '{"function_name": "function_one"}'
            FunctionTwoSchedule:
              Type: Schedule
              Properties:
                Enabled: True
                Schedule: cron(0/5 * * * ? *)
                Input: '{"function_name": "function_two"}'
          Environment:
            Variables:
              API_USERNAME: !Ref APIUSERNAME
              API_PASSWORD: !Ref APIPASSWORD

This template is processed by AWS SAM, a CLI tool that creates the stack on AWS from this definition. I decided not to install SAM locally, but you can do so for testing.
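
To make the ‘Input’ mechanism a bit more concrete: the value in the template is a JSON string, and the handler sees the parsed result as its event dictionary. The sketch below mimics that round trip locally with the standard json module. The helper names schedule_input and event_from_input are hypothetical and only there for illustration; nothing here talks to AWS.

```python
import json


def schedule_input(function_name):
    """Return a JSON string suitable for a schedule's Input property."""
    return json.dumps({"function_name": function_name})


def event_from_input(input_string):
    """Mimic what the handler receives: the Input string parsed as JSON."""
    return json.loads(input_string)


input_string = schedule_input("function_two")
event = event_from_input(input_string)

print(input_string)             # {"function_name": "function_two"}
print(event["function_name"])   # function_two
```

Generating the string with json.dumps rather than typing it by hand is just a way to avoid quoting mistakes; the template above uses the hand-written form.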

5. GitHub Actions Workflow

The final step is to create a GitHub Actions workflow (.github/workflows/main.yml). This tells GitHub when to run the workflow (on push to the main branch) and which steps to perform: use an Ubuntu runner, check out the repo, set up Python and AWS SAM, and configure AWS credentials. These ‘actions’ are reusable templates provided in GitHub Actions (you can add your own). The final steps run the sam build and sam deploy commands, which have quite a few required options. It’s important to note that if you need to access environment variables inside the lambda code (API_USERNAME and API_PASSWORD in this example, which should be stored as the lambda function’s environment variables), you need to add them in the sam deploy command here (to get them from the GitHub secrets) AND pass them through in the template above AND add them as a parameter reference at the top of the template as well AND make the parameter names alphanumeric AND escape special characters in the password (which I failed to fix)… what a faff! It took me 21 commits to get this part working, and in the end I gave up and removed the special characters from the parameters I was passing.

    name: deploy cloud_functions to AWS lambda
    on:
      push:
        branches:
          - main
    jobs:
      deploy-to-aws:
        runs-on: ubuntu-latest
        steps:
          - uses: actions/checkout@v3
          - uses: actions/setup-python@v3
          - uses: aws-actions/setup-sam@v2
          - uses: aws-actions/configure-aws-credentials@v1
            with:
              aws-access-key-id: ${{ secrets.AWS_ACCESS_KEY_ID }}
              aws-secret-access-key: ${{ secrets.AWS_SECRET_ACCESS_KEY }}
              aws-region: us-east-1
          # Build using SAM
          - run: sam build --use-container --template-file template.yaml
          # Deploy on AWS
          - run: sam deploy --no-confirm-changeset --no-fail-on-empty-changeset --capabilities CAPABILITY_IAM --stack-name ZwCloudFunctionsStack --resolve-s3 --on-failure ROLLBACK --parameter-overrides ParameterKey=APIUSERNAME,ParameterValue=${{ secrets.API_USERNAME }} ParameterKey=APIPASSWORD,ParameterValue=${{ secrets.API_PASSWORD }}
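
Once the parameters have made it through the deploy command and the template, the lambda code sees API_USERNAME and API_PASSWORD as ordinary environment variables. Here is a minimal sketch of reading them and failing early if the deploy did not set them. The names load_credentials and REQUIRED_VARIABLES are my own for illustration, and the environ argument exists only so the function can be tried locally with a plain dictionary.

```python
import os

# Variable names as defined under Environment > Variables in template.yaml.
REQUIRED_VARIABLES = ("API_USERNAME", "API_PASSWORD")


def load_credentials(environ=os.environ):
    """Return (username, password), raising if either variable is missing or empty."""
    missing = [name for name in REQUIRED_VARIABLES if not environ.get(name)]
    if missing:
        raise RuntimeError(f"missing environment variables: {', '.join(missing)}")
    return environ["API_USERNAME"], environ["API_PASSWORD"]
```

A clear error like this would probably have saved me a few of those commits, because an unset variable is reported by name in the logs instead of failing somewhere deeper in the code.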

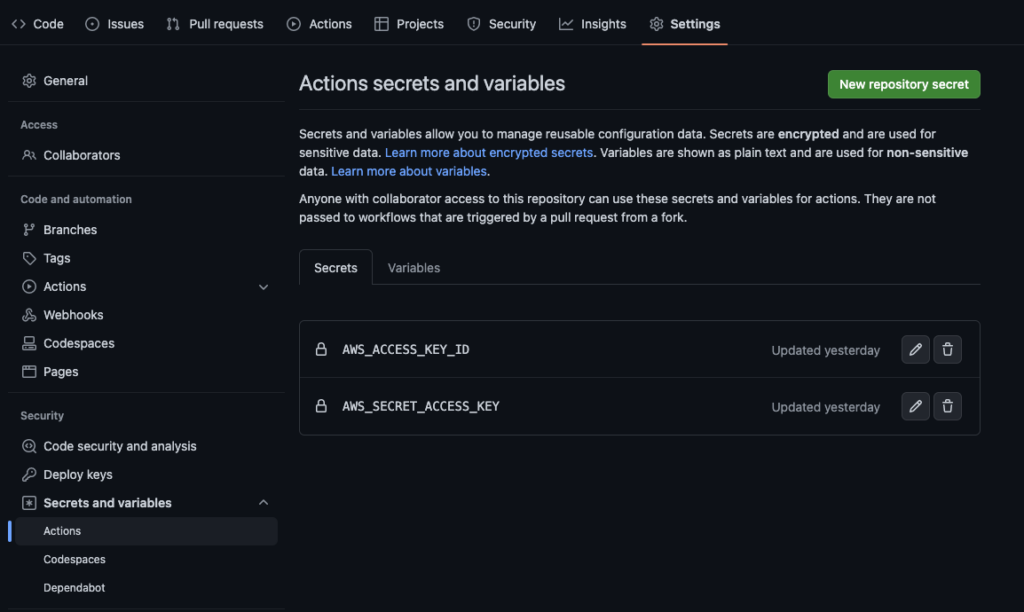

In order for this to work, you need to add your AWS credentials to your repo as secrets (along with API_USERNAME and API_PASSWORD, which the workflow also reads): Repo Settings > Secrets and variables > Actions > New repository secret. You can get the AWS credentials from your AWS dashboard: IAM > User > Security Credentials tab > Access Keys > Create Access Keys.

That’s it! You can simply commit and git push the code to your repo, and you’ll be able to see the progress of the deployment and any errors on the repo’s ‘Actions’ tab. For any issues with the code itself, you can see the logs in AWS CloudWatch. If you want to remove everything from AWS, you need to go to CloudFormation and delete the entire stack from there.

With some help from the following blogs:

https://www.sufle.io/blog/aws-lambda-deployment-with-github-actions

https://github.com/aws/aws-sam-cli/issues/2034

https://stackoverflow.com/questions/66579433/how-to-add-environment-variables-in-template-yaml-in-a-secured-way